Architecture

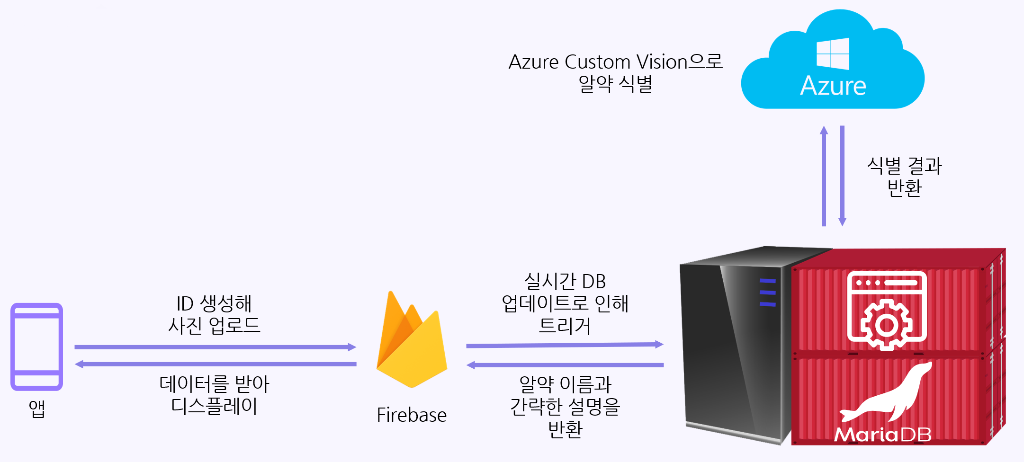

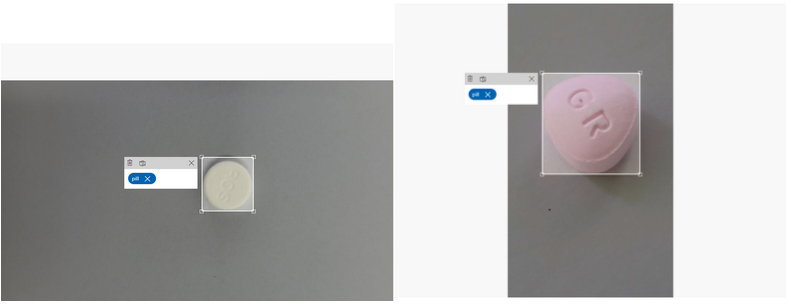

Case #1: Using the phone’s camera

- Users take one or more photos of a pill, and each photo is uploaded to Firebase.

- For each photo, the server calls Azure Custom Vision to identify the pill.

- The server aggregates the results and ranks the candidate pills from most to least likely.

- The server looks up each candidate pill by name in a MariaDB database to fetch its short description.

- The app displays the candidates as a list of cards, each showing a pill's name and description.
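The server-side steps above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the project's actual code: it assumes each Custom Vision classification response is JSON with a `predictions` array of `tagName`/`probability` entries, that "aggregating" means averaging each pill's probability across all photos, and it uses the standard-library `sqlite3` module as a stand-in for MariaDB with a hypothetical `pills(name, description)` table.

```python
import sqlite3
from collections import defaultdict


def parse_predictions(response_json):
    """Extract (tagName, probability) pairs from a Custom Vision
    classification response (its "predictions" array)."""
    return [(p["tagName"], p["probability"])
            for p in response_json.get("predictions", [])]


def aggregate_predictions(per_photo, top_k=5):
    """Rank pill names by mean probability over all photos.

    Assumption: a pill absent from one photo's predictions contributes 0
    for that photo.
    """
    if not per_photo:
        return []
    totals = defaultdict(float)
    for predictions in per_photo:
        for tag, prob in predictions:
            totals[tag] += prob
    n = len(per_photo)
    ranked = sorted(((tag, total / n) for tag, total in totals.items()),
                    key=lambda item: item[1], reverse=True)
    return ranked[:top_k]


def fetch_descriptions(conn, names):
    """Map each pill name to its short description (None if not found).

    Uses a parameterized query; sqlite3 stands in for MariaDB, and the
    table/column names (pills.name, pills.description) are hypothetical.
    """
    cur = conn.cursor()
    descriptions = {}
    for name in names:
        cur.execute("SELECT description FROM pills WHERE name = ?", (name,))
        row = cur.fetchone()
        descriptions[name] = row[0] if row else None
    return descriptions


if __name__ == "__main__":
    # Two hypothetical photos of the same pill.
    photos = [
        parse_predictions({"predictions": [
            {"tagName": "pill_a", "probability": 0.7},
            {"tagName": "pill_b", "probability": 0.2}]}),
        parse_predictions({"predictions": [
            {"tagName": "pill_a", "probability": 0.5},
            {"tagName": "pill_c", "probability": 0.4}]}),
    ]
    ranked = aggregate_predictions(photos)
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE pills (name TEXT PRIMARY KEY, description TEXT)")
    conn.execute("INSERT INTO pills VALUES ('pill_a', 'Hypothetical description')")
    cards = fetch_descriptions(conn, [name for name, _ in ranked])
    for name, score in ranked:
        print(f"{name} ({score:.2f}): {cards[name]}")
```

Averaging rewards a pill that scores consistently across photos; majority voting over each photo's top prediction would be a reasonable alternative. With a MariaDB driver, the query placeholder style may differ from sqlite3's `?`.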

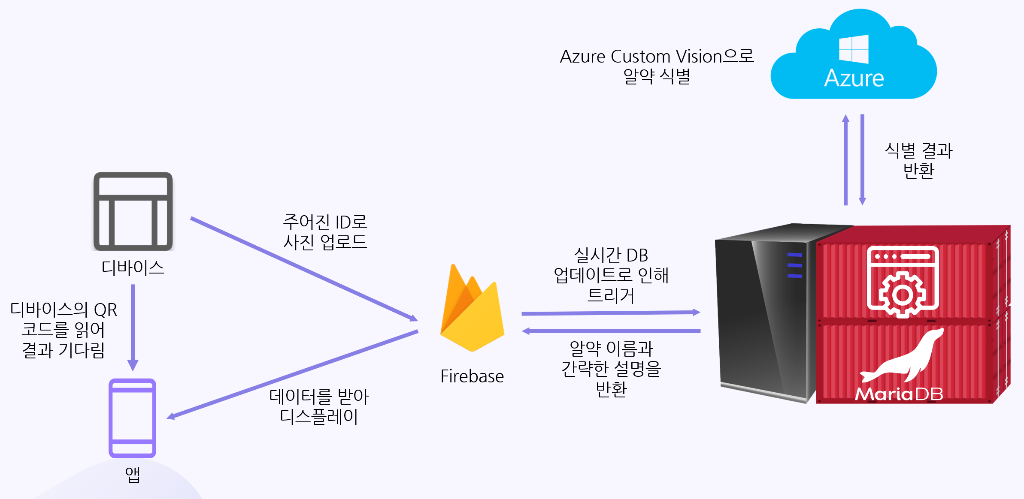

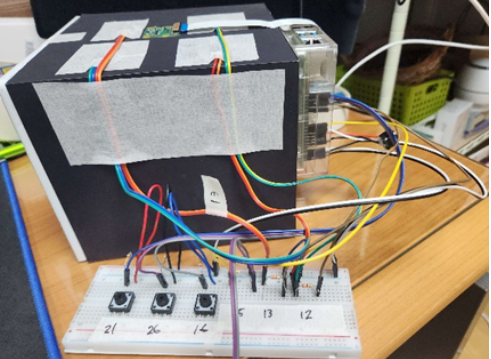

Case #2: Using a dedicated device to take photos

- A dedicated device is also available to provide a stable environment for taking photos. It can make the service easier to use for visually impaired users as well.

- Once photos are taken, the device follows the same recognition pipeline as Case #1.

- Users scan a QR code on the device to view the results on their phone.
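One way the QR code could link a device session to its results is sketched below. Everything here is an assumption rather than the project's documented design: the device generates a random session ID, stores its results under that ID, and encodes a results URL in the QR code. `RESULTS_BASE_URL` and the `session` query parameter are hypothetical.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlsplit

RESULTS_BASE_URL = "https://example.com/results"  # hypothetical results page


def new_session_id():
    """Random, URL-safe token (16 bytes of entropy) naming one scan session."""
    return secrets.token_urlsafe(16)


def results_url(session_id, base_url=RESULTS_BASE_URL):
    """URL the device would encode into its QR code."""
    return f"{base_url}?{urlencode({'session': session_id})}"


def session_from_url(url):
    """Server side: recover the session id from a scanned URL (None if absent)."""
    values = parse_qs(urlsplit(url).query).get("session")
    return values[0] if values else None
```

The URL string can be rendered as a QR image with a library such as the third-party `qrcode` package (`qrcode.make(url)`). Using an unguessable token keeps one user's results from being reachable by guessing another session's URL.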

Development Process

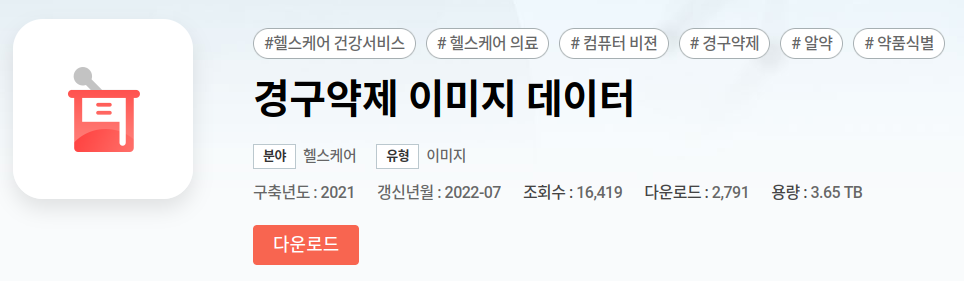

Training the pill recognition model

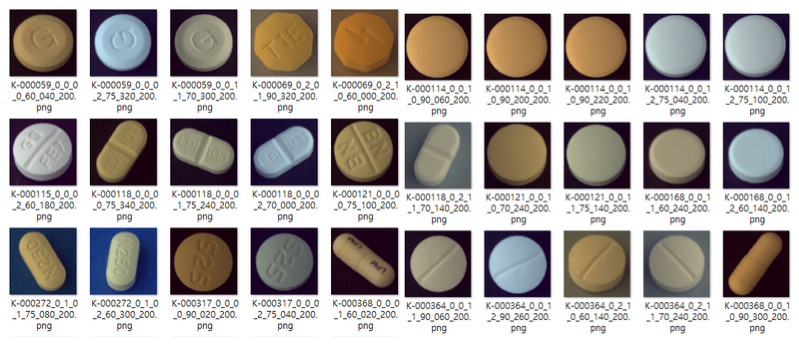

We used Azure Custom Vision because we lacked the hardware and time to design and train our own model. For training data, we used the pill image dataset available on AiHub:

https://aihub.or.kr/aihubdata/data/view.do?currMenu=&topMenu=&aihubDataSe=data&dataSetSn=576

The dataset is 3.6 TB and covers 5,000 different pills distributed in Korea. Given its size, we used a subset of 100 pills.

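During training, accuracy plateaued and would not improve further. We hypothesized that the cause was the back side of the pills: the backs of many pills look nearly identical, so photos of them give the classifier little to tell pills apart by, which degraded performance. To address this, we implemented a second model that detects whether a photo shows a pill's back side (i.e., whether the visible face is smooth).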

This model also returns bounding-box coordinates that are used to crop the image tightly around the pill, because we found that the background behind the pill also reduced identification accuracy.
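The back-side check and cropping step can be sketched as follows. This assumes the second model is a Custom Vision object detection model whose predictions carry a `tagName`, a `probability`, and a `boundingBox` with `left`, `top`, `width`, and `height` normalized to the 0 to 1 range. The tag name `back`, the 0.5 confidence threshold, the 5% margin, and the choice to skip back-side photos entirely are illustrative assumptions, not details from the project.

```python
def to_pixel_box(bbox, img_width, img_height, margin=0.05):
    """Convert a normalized bounding box to a (left, upper, right, lower)
    pixel tuple, padded by `margin` (a fraction of the box size) and
    clamped to the image bounds."""
    def clamp(v):
        return min(max(v, 0.0), 1.0)

    left = bbox["left"] - bbox["width"] * margin
    top = bbox["top"] - bbox["height"] * margin
    right = bbox["left"] + bbox["width"] * (1 + margin)
    bottom = bbox["top"] + bbox["height"] * (1 + margin)
    return (round(clamp(left) * img_width), round(clamp(top) * img_height),
            round(clamp(right) * img_width), round(clamp(bottom) * img_height))


def select_crop(detections, img_width, img_height, back_tag="back", min_prob=0.5):
    """Return a pixel crop box for the most confident detection, or None.

    Returns None when no detection clears `min_prob`, or when the best one
    is tagged as a pill's (smooth) back side, so that photo can be skipped.
    """
    confident = [d for d in detections if d["probability"] >= min_prob]
    if not confident:
        return None
    best = max(confident, key=lambda d: d["probability"])
    if best["tagName"] == back_tag:
        return None
    return to_pixel_box(best["boundingBox"], img_width, img_height)
```

The returned `(left, upper, right, lower)` tuple matches the box format that Pillow's `Image.crop` expects, so the cropped image, rather than the full photo, could be sent to the pill classifier.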

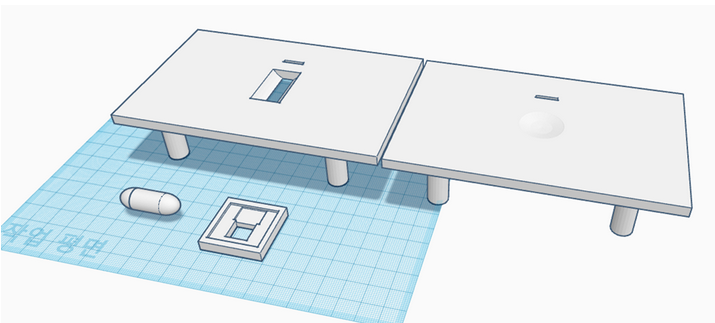

Designing the device

We built the device from a Raspberry Pi in a 3D-printed enclosure to provide a stable environment for taking images. The user places the pill on a platform, and a roller set in a slit in the middle of the platform is meant to roll the pill so that photos can be taken from multiple angles. However, the roller did not work as well as expected.
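The intended capture sequence (take a photo, roll the pill, repeat) can be sketched as below. The `capture` and `rotate_step` callables are hypothetical placeholders for whatever wraps the Pi camera and the roller motor, and the default of four angles is an arbitrary assumption.

```python
from typing import Callable, List


def capture_multiple_angles(capture: Callable[[], bytes],
                            rotate_step: Callable[[], None],
                            n_angles: int = 4) -> List[bytes]:
    """Take `n_angles` photos, rolling the pill between consecutive shots.

    `capture` returns one encoded image; `rotate_step` advances the roller
    by one step. Both are hypothetical hardware wrappers.
    """
    if n_angles < 1:
        raise ValueError("n_angles must be at least 1")
    photos = []
    for i in range(n_angles):
        photos.append(capture())
        if i < n_angles - 1:
            rotate_step()  # roll the pill to expose a different face
    return photos
```

Passing the hardware actions in as functions keeps the loop testable without a Raspberry Pi attached.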